The analogue chip that could increase AI efficiency

Ryan Lavine/IBM

The energy consumption of AI research is vast and growing, and there is a worldwide shortage of the digital chips usually used to run it. An analogue computer chip that can run an AI speech recognition model up to 14 times more efficiently than conventional processors could help address both problems.

IBM Research, which developed the device, declined New Scientist’s request for an interview and did not provide any comment. However, in a paper describing the work, the team says the analogue chip could ease bottlenecks in AI development.

Graphics processing units (GPUs), originally developed to run video games, have long been used to train and run artificial intelligence models, and they are now in high demand. Research has estimated that AI’s energy consumption rose by a factor of 100 between 2012 and 2021, with most of that energy coming from fossil fuels. These concerns have led to suggestions that ever-larger AI models will soon hit a wall.

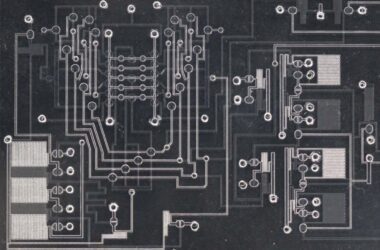

Current AI hardware also suffers from severe bottlenecks caused by the number of times data must be shuttled between memory and processors. IBM has now demonstrated a scalable version of an alternative called an analogue compute-in-memory (CiM) processor, which performs calculations entirely within its own memory.
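To make the bottleneck concrete, here is a deliberately simplified Python sketch that counts the bytes crossing the memory boundary for one neural-network layer. It assumes a hypothetical layer of `rows × cols` one-byte weights, a conventional processor that re-reads every weight for each input (no on-chip caching), and a CiM array whose weights are written once and then stay in place. The function names and figures are illustrative and are not taken from IBM’s paper.

```python
# Toy model of memory traffic for one layer computing y = W x, where W has
# shape rows x cols. Illustrative assumptions only, not IBM's accounting.


def von_neumann_traffic(rows, cols, n_inputs, bytes_per_value=1):
    """Bytes moved when the weights live in separate memory and are
    re-fetched for every input vector (worst case: nothing cached)."""
    weight_bytes = rows * cols * bytes_per_value * n_inputs
    # Each input vector (cols values) goes in and each output (rows values) comes out.
    activation_bytes = (rows + cols) * bytes_per_value * n_inputs
    return weight_bytes + activation_bytes


def compute_in_memory_traffic(rows, cols, n_inputs, bytes_per_value=1):
    """Bytes moved when the weights are programmed into the memory array
    once and the arithmetic happens where they are stored."""
    programming_bytes = rows * cols * bytes_per_value  # one-off write
    activation_bytes = (rows + cols) * bytes_per_value * n_inputs
    return programming_bytes + activation_bytes


if __name__ == "__main__":
    conventional = von_neumann_traffic(512, 512, n_inputs=1000)
    in_memory = compute_in_memory_traffic(512, 512, n_inputs=1000)
    print(conventional, in_memory, round(conventional / in_memory, 1))
```

For a 512 × 512 layer and 1,000 inputs, this toy model gives 263,168,000 bytes for the conventional case against 1,286,144 for the in-memory case. Real processors cache weights on chip, so the true gap is far smaller; the sketch only shows why keeping weights where the arithmetic happens removes most of the traffic.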

The CiM in IBM’s device consists of 35 million phase-change memory cells. Like transistors in a computer chip, each cell can be programmed to one of two states, but it can also be set to intermediate states anywhere between them.
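The difference between a two-state cell and a multi-level cell can be sketched in a few lines of Python. The sketch assumes an idealised cell whose conductance ranges from 0 to 25 microsiemens and can be programmed to any of `levels` evenly spaced values; the range, the even spacing and the `program_cell` name are illustrative assumptions, and real phase-change cells are noisier and drift over time.

```python
def program_cell(target, g_min=0.0, g_max=25.0, levels=2):
    """Return the conductance (in microsiemens) an idealised cell ends up at
    when asked to store `target`: clip to the cell's range, then snap to the
    nearest of `levels` evenly spaced states."""
    if levels < 2:
        raise ValueError("a memory cell needs at least two states")
    clipped = min(max(target, g_min), g_max)
    step = (g_max - g_min) / (levels - 1)
    return g_min + round((clipped - g_min) / step) * step


if __name__ == "__main__":
    targets = [t / 10 for t in range(0, 251)]  # 0.0 to 25.0 uS in 0.1 uS steps
    binary = {program_cell(t, levels=2) for t in targets}
    multi = {program_cell(t, levels=16) for t in targets}
    print(len(binary), len(multi))  # prints: 2 16
```

With two levels, every target collapses to fully off or fully on; with 16 levels, the same cell can hold a much finer-grained value, which is what makes it useful for storing neural-network weights.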

This ability to hold intermediate states is crucial because those states can represent the strength of the connections, or weights, between artificial neurons in a neural network, a type of AI loosely modelled on the way connections between neurons in the human brain change in strength as it learns new information or skills. Conventionally, these weights are stored as digital values in computer memory. The new chip can instead store and process them in place, without performing millions of operations to fetch data from, or write it to, distant memory chips.
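In analogue compute-in-memory designs generally, the multiplication is done by circuit physics: each cell passes a current equal to its input voltage times its conductance (Ohm’s law), and the currents from every cell on a shared wire add up (Kirchhoff’s current law), so a whole matrix-vector product is computed in one step. The Python sketch below simulates that idea under stated assumptions: weights are limited to [-1, 1], each signed weight is stored as a pair of conductances (one for the positive part, one for the negative part, a common scheme assumed here rather than a detail confirmed for IBM’s chip), and programming error is modelled as optional Gaussian noise. All function names are hypothetical.

```python
import random


def weights_to_conductances(weights, g_max=25.0):
    """Map each signed weight in [-1, 1] to a differential pair of
    conductances (G_plus, G_minus) in microsiemens, so that
    w is approximately (G_plus - G_minus) / g_max."""
    for row in weights:
        for w in row:
            if abs(w) > 1.0:
                raise ValueError("weights must lie in [-1, 1] for this sketch")
    g_plus = [[g_max * max(w, 0.0) for w in row] for row in weights]
    g_minus = [[g_max * max(-w, 0.0) for w in row] for row in weights]
    return g_plus, g_minus


def crossbar_mvm(g_plus, g_minus, voltages, g_max=25.0, noise_std=0.0, seed=0):
    """Simulate one analogue matrix-vector product.

    Each cell contributes current I = V * G (Ohm's law); the currents on a
    shared output line add up (Kirchhoff's current law). Subtracting the
    G_minus contribution from the G_plus contribution gives a signed result."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    outputs = []
    for gp_row, gm_row in zip(g_plus, g_minus):
        current = 0.0  # microamps, if voltages are in volts
        for v, gp, gm in zip(voltages, gp_row, gm_row):
            # Optional programming error, modelled as Gaussian noise (uS).
            gp_actual = gp + rng.gauss(0.0, noise_std)
            gm_actual = gm + rng.gauss(0.0, noise_std)
            current += v * (gp_actual - gm_actual)
        outputs.append(current / g_max)  # scale current back to weight units
    return outputs


def digital_mvm(weights, inputs):
    """Exact reference result, computed the conventional digital way."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


if __name__ == "__main__":
    W = [[0.5, -0.25], [1.0, 0.0]]
    x = [1.0, 2.0]
    g_plus, g_minus = weights_to_conductances(W)
    print(digital_mvm(W, x))
    print(crossbar_mvm(g_plus, g_minus, x))                 # ideal cells
    print(crossbar_mvm(g_plus, g_minus, x, noise_std=0.5))  # noisy cells
```

With ideal cells the simulated array reproduces the digital answer; with noise it lands close to, but not exactly on, that answer, which illustrates the precision trade-off analogue computing accepts in exchange for not moving the weights.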

In speech recognition tests, the chip achieved an efficiency of 12.4 trillion operations per second per watt, making it up to 14 times more energy-efficient than conventional processors.
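The efficiency figure converts directly into energy per operation: one watt is one joule per second, so operations per second per watt is the same as operations per joule. The short Python sketch below does that conversion. The comparison baseline of 12.4 ÷ 14 ≈ 0.89 trillion operations per second per watt is only what the “up to 14 times” figure implies at its upper bound; it is an illustrative assumption, not a measured number for any particular processor.

```python
def energy_per_op_joules(tops_per_watt):
    """Energy per operation, given efficiency in trillions of operations
    per second per watt (TOPS/W). Since 1 W = 1 J/s, ops/s/W = ops/J."""
    ops_per_joule = tops_per_watt * 1e12
    return 1.0 / ops_per_joule


if __name__ == "__main__":
    chip = energy_per_op_joules(12.4)
    # Hypothetical baseline implied by the "up to 14 times" claim.
    baseline = energy_per_op_joules(12.4 / 14)
    print(f"chip:     {chip * 1e15:.1f} fJ per operation")
    print(f"baseline: {baseline * 1e15:.1f} fJ per operation")
```

This works out to roughly 81 femtojoules per operation for the chip, against roughly 1.1 picojoules for the implied baseline.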

Hechen Wang at Intel says the chip is “far from a mature product,” but experiments have shown that it can work effectively on the neural network types commonly used today, the two best-known examples being convolutional neural networks (CNNs) and recurrent neural networks (RNNs). This suggests the chip may eventually be able to support popular applications like ChatGPT.
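One reason CiM hardware can plausibly serve both CNNs and RNNs is that the heavy lifting in each reduces to matrix-vector multiplication, the operation an analogue memory array performs. The Python sketch below shows that reduction: a 1-D convolution rewritten as a single product with a matrix of shifted kernel copies, and one step of a simple (Elman) RNN built from two such products. The `mvm` function is a hypothetical digital stand-in for an analogue tile, and whether IBM’s chip maps layers exactly this way is not stated in the article.

```python
import math


def mvm(matrix, vector):
    """y = W x. Stands in for an analogue compute-in-memory tile
    (computed digitally here for illustration)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def conv1d_as_mvm(signal, kernel):
    """'Valid' 1-D convolution (cross-correlation, as deep-learning
    libraries define it) as one matrix-vector product: row i of the
    matrix is the kernel shifted i places to the right."""
    n, k = len(signal), len(kernel)
    if k > n:
        raise ValueError("kernel longer than signal")
    matrix = [[0.0] * i + list(kernel) + [0.0] * (n - k - i)
              for i in range(n - k + 1)]
    return mvm(matrix, signal)


def rnn_step(w_x, w_h, bias, x_t, h_prev):
    """One step of an Elman RNN: h_t = tanh(W_x x_t + W_h h_prev + b).
    Both products are matrix-vector multiplications."""
    from_input = mvm(w_x, x_t)
    from_state = mvm(w_h, h_prev)
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_state, bias)]


if __name__ == "__main__":
    print(conv1d_as_mvm([1.0, 2.0, 3.0, 4.0], [1.0, 0.0, -1.0]))  # [-2.0, -2.0]
    w_x = [[1.0, 0.0], [0.0, 1.0]]
    w_h = [[0.5, 0.0], [0.0, 0.5]]
    h = [0.0, 0.0]
    for x_t in ([0.2, -0.3], [0.1, 0.4]):
        h = rnn_step(w_x, w_h, [0.0, 0.0], x_t, h)
    print(h)
```

Because the weight matrices stay fixed while inputs stream through, both layer types fit the CiM pattern of programming weights once and reusing them for every input.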

According to Wang, highly customised chips can deliver unprecedented efficiency, but this comes at the cost of feasibility. Just as a GPU cannot handle every task a general-purpose processor can, an analogue-AI chip, also known as an analogue compute-in-memory chip, has its own limitations. If AI keeps developing at its current rate, though, he expects highly customised chips to become increasingly common.

Although the chip was developed for IBM’s speech recognition work, Wang says it may have other applications. As long as people are still using CNNs or RNNs, he says, the chip “won’t be completely useless or e-waste”. He adds that analogue-AI, or analogue compute-in-memory, has been shown to use power and silicon more efficiently than conventional central processing unit (CPU) or GPU architectures.

FAQs

1. What is the impact of AI research on energy consumption?

AI research uses a large and growing amount of energy. One estimate suggests that AI’s energy consumption rose a hundredfold between 2012 and 2021, with most of that energy coming from fossil fuels.

2. How can analogue chips address AI development bottlenecks?

Analogue compute-in-memory (CiM) chips perform calculations directly within their own memory, which reduces the bottleneck caused by repeatedly transferring data between memory and processors.

3. What is the significance of the CiM processor’s architecture?

The CiM processor is built from phase-change memory cells that can be set to intermediate states as well as two fixed ones. These states can represent the weights of a neural network, which the chip can then store and process in place, improving energy efficiency.

4. How does the CiM processor perform in speech recognition tests?

In speech recognition tests, the CiM processor achieved an efficiency of 12.4 trillion operations per second per watt, up to 14 times more energy-efficient than conventional processors.

5. Can the CiM processor support popular AI applications like ChatGPT?

Possibly. Experiments have shown that the CiM processor works effectively with popular neural network types such as convolutional neural networks and recurrent neural networks, which suggests it may eventually support applications like ChatGPT. However, Intel’s Hechen Wang describes the chip as “far from a mature product”.